In this blog we will try to understand the use case of Kiali, the monitoring and observability console for ISTIO.

Please revisit my earlier blogs below to understand the ISTIO service mesh before moving forward.

http://siddharathadhumale.blogspot.com/2021/03/istio-setup-in-window-with-kubernetes.html

http://siddharathadhumale.blogspot.com/2021/03/istio-architecture-and-its-components.html

In the above blogs we used a ready-made microservice example:

https://github.com/GoogleCloudPlatform/microservices-demo

In this blog we will configure ISTIO with our own simple microservices.

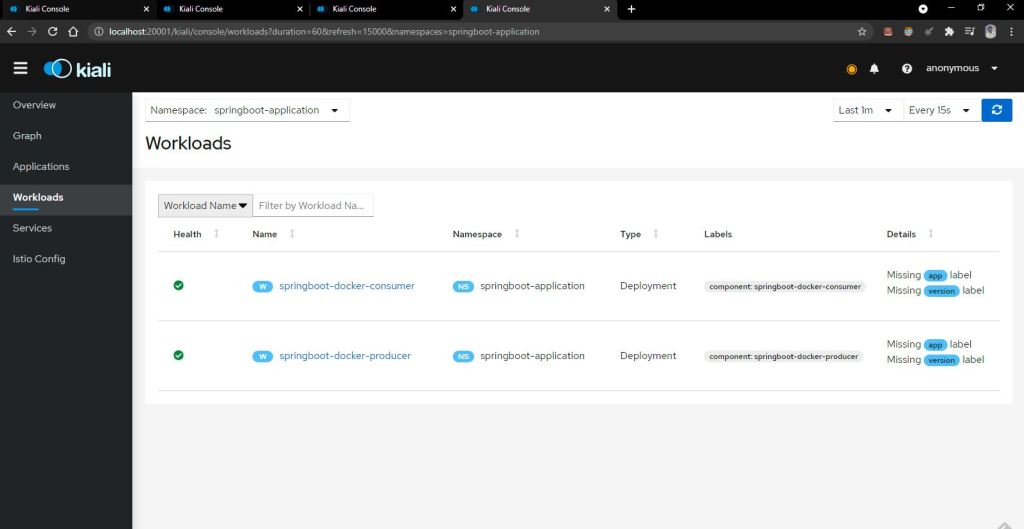

We have two microservices:

1- Producer :- produces simple JSON employee data.

2- Consumer :- consumes the API exposed by the producer and shows the data on screen.

Both microservices are already published on the public Docker Hub,

i.e. the producer as shdhumale/springboot-docker-producer and the consumer as shdhumale/springboot-docker-consumer.

Now follow the steps below carefully to set up ISTIO on your machine and configure the two microservices above.

Step 1:- Set up ISTIO

1- As we are using a Windows machine, we use Docker Desktop with its built-in Kubernetes enabled. Please make sure to use the configuration below as the initial configuration for Docker Desktop with Kubernetes.

Make sure docker and kubectl are installed by checking them with the commands below.

2- Check that the Kubernetes cluster is empty, i.e. it has no pods, services, etc., using the commands below.

Now let's download ISTIO. The getting-started page https://istio.io/latest/docs/setup/getting-started/ says you can install ISTIO using a curl command.

The other way is to download the release directly from the GitHub repository:

https://github.com/istio/istio/releases/tag/1.10.1

I downloaded the istio-1.10.1-win package, extracted it and kept the folder in the C drive.

Inside C:\istio-1.10.1\bin you will find the ISTIO command-line tool istioctl. Add this folder to the Windows PATH.

With istioctl on the PATH, let's first confirm that the cluster is empty:

```
C:\Users\Siddhartha>kubectl get ns
NAME              STATUS   AGE
default           Active   7d4h
kube-node-lease   Active   7d4h
kube-public       Active   7d4h
kube-system       Active   7d4h
```

As shown above, there is no ISTIO namespace yet.

```
C:\Users\Siddhartha>kubectl get pod
No resources found in default namespace.
```

There are no pods in the default namespace either.

This confirms that we have an empty Kubernetes cluster. Now let's install ISTIO using the following command:

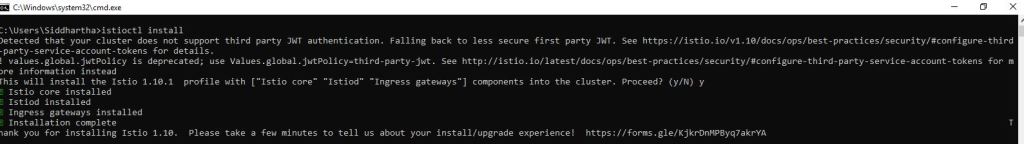

```
C:\Users\Siddhartha>istioctl install
Detected that your cluster does not support third party JWT authentication. Falling back to less secure first party JWT. See https://istio.io/v1.10/docs/ops/best-practices/security/#configure-third-party-service-account-tokens for details.
! values.global.jwtPolicy is deprecated; use Values.global.jwtPolicy=third-party-jwt. See http://istio.io/latest/docs/ops/best-practices/security/#configure-third-party-service-account-tokens for more information instead
This will install the Istio 1.10.1 profile with ["Istio core" "Istiod" "Ingress gateways"] components into the cluster. Proceed? (y/N) y
Istio core installed
Istiod installed
Ingress gateways installed
Installation complete
Thank you for installing Istio 1.10. Please take a few minutes to tell us about your install/upgrade experience! https://forms.gle/KjkrDnMPByq7akrYA
```

As shown above, this installs the following:

Istio core; Istiod, the ISTIO control-plane daemon; and the ingress gateway, which lets traffic from outside the cluster flow into our services through ISTIO.

Now let's check the namespaces again:

```
C:\Users\Siddhartha>kubectl get ns
NAME              STATUS   AGE
default           Active   27m
istio-system      Active   2m53s
kube-node-lease   Active   27m
kube-public       Active   27m
kube-system       Active   27m
```

As shown above, we now have the istio-system namespace.

Now let's check which pods are running in the istio-system namespace:

```
C:\Users\Siddhartha>kubectl get pod -n istio-system
NAME                                   READY   STATUS    RESTARTS   AGE
istio-ingressgateway-f6c955cd8-z5hrt   1/1     Running   0          3m20s
istiod-58bb7c6644-kwb2k                1/1     Running   0          4m8s
```

The output shows two pods running in our cluster: istiod and the ingress gateway.

Step 2:- Configuring and installing our microservices with ISTIO.

But first we need to understand how ISTIO works.

ISTIO works by injecting an Envoy sidecar proxy into each microservice pod, one proxy per pod. For this we have to tell ISTIO which namespace to inject the Envoy proxy into. If we simply deploy our microservices into the default namespace, ISTIO will not inject the Envoy proxy there; it does not work like that. Instead, we need to deploy all our microservices into a namespace that carries the label istio-injection=enabled. ISTIO will then automatically inject the Envoy proxy into every pod created in that namespace. Because injection happens when a pod is created, the label must be in place before the microservices are deployed.

So we will create a springboot-application namespace and give it this label.

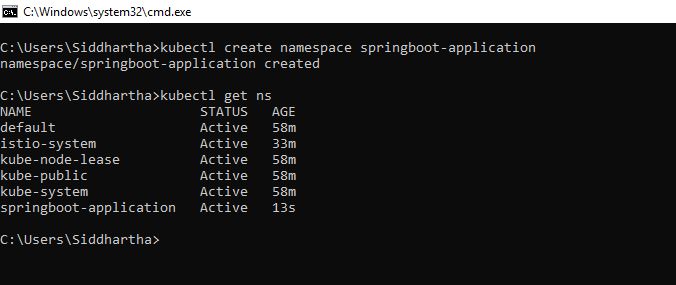

Let's first create the namespace with the command below:

```
C:\Users\Siddhartha>kubectl create namespace springboot-application
namespace/springboot-application created
```

Now we can see our namespace:

```
C:\Users\Siddhartha>kubectl get ns springboot-application --show-labels
NAME                     STATUS   AGE   LABELS
springboot-application   Active   71s   <none>
```

As shown above, the springboot-application namespace does not have any label yet.

Let's add the istio-injection=enabled label to the springboot-application namespace using the command below:

```
C:\Users\Siddhartha>kubectl label namespace springboot-application istio-injection=enabled
namespace/springboot-application labeled
```

Check that the label is applied properly:

```
C:\Users\Siddhartha>kubectl get ns springboot-application --show-labels
NAME                     STATUS   AGE   LABELS
springboot-application   Active   22m   istio-injection=enabled
```
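If you want to script this check instead of reading the LABELS column, here is a small Python sketch. It assumes kubectl is on the PATH and reads the JSON printed by `kubectl get ns springboot-application -o json`. The function names are my own, not part of any ISTIO tool, and the sample dictionary mimics only the fields the check reads.

```python
import json
import subprocess


def injection_enabled(namespace_obj: dict) -> bool:
    """Return True if a Namespace object carries istio-injection=enabled."""
    labels = namespace_obj.get("metadata", {}).get("labels") or {}
    return labels.get("istio-injection") == "enabled"


def get_namespace(name: str) -> dict:
    """Fetch a Namespace as JSON via kubectl (assumes kubectl is on the PATH)."""
    out = subprocess.run(
        ["kubectl", "get", "ns", name, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


# Hand-written sample with the same shape kubectl returns after labelling.
sample = {
    "kind": "Namespace",
    "metadata": {
        "name": "springboot-application",
        "labels": {"istio-injection": "enabled"},
    },
}
print(injection_enabled(sample))  # True
```

Against the real cluster you would call `injection_enabled(get_namespace("springboot-application"))`.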

Now let's create the files required to deploy and expose our microservices through ISTIO.

As per the ISTIO architecture, we need the following:

1- Gateway :- works as the ingress point. It accepts requests from the outside world and passes them into the mesh.

2- Virtual service :- defines routing rules, either between the gateway and our microservices or between the microservices themselves. Here we use a virtual service between the gateway and the consumer microservice.

3- Our own components to deploy (a Deployment and a Service for each microservice).

Let's create these files one by one.

1- gateway.yaml

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: mysiddhuweb-gateway
  namespace: springboot-application
spec:
  selector:
    istio: ingressgateway  # bind to the default istio-ingressgateway pods
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "mysiddhuweb.example.com"  # host name this gateway accepts requests for
```

2- virtual-service.yaml

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: mysiddhuweb-vs
  namespace: springboot-application
spec:
  hosts:
  - "mysiddhuweb.example.com"
  gateways:
  - mysiddhuweb-gateway  # attach these routes to the Gateway defined in gateway.yaml
  http:
  - route:
    - destination:
        host: siddhuconsumer  # Kubernetes Service of the consumer
        port:
          number: 8090
```
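Both the Gateway and the VirtualService match on the host name mysiddhuweb.example.com. A request sent to the ingress gateway is therefore routed to siddhuconsumer only when it carries that Host header. On Docker Desktop the istio-ingressgateway service is exposed on localhost port 80 (see the kubectl get svc output later in this post). Once all the files are applied, you could exercise the route with a sketch like the one below. The function name is my own, and the /getEmployee path is borrowed from the curl command used later in this post.

```python
import urllib.request


def call_via_gateway(path: str = "/getEmployee",
                     gateway: str = "http://localhost",
                     host: str = "mysiddhuweb.example.com",
                     timeout: float = 5.0) -> tuple:
    """GET <gateway><path> with the Host header the Gateway/VirtualService match.

    Returns (status_code, body_text).
    """
    # Ignore any HTTP(S)_PROXY settings: the gateway is on the local machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    req = urllib.request.Request(gateway + path, headers={"Host": host})
    with opener.open(req, timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8", errors="replace")
```

If the routing is set up as described, `status, body = call_via_gateway()` should return the same employee data as calling the consumer directly. The curl equivalent is `curl -H "Host: mysiddhuweb.example.com" http://localhost/getEmployee`.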

3- springboot-docker-consumer.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: springboot-docker-consumer
  namespace: springboot-application
spec:
  selector:
    matchLabels:
      component: springboot-docker-consumer
  template:
    metadata:
      labels:
        component: springboot-docker-consumer
    spec:
      containers:
      - name: springboot-docker-consumer
        image: shdhumale/springboot-docker-consumer:latest
        env:
        - name: discovery.type
          value: single-node
        ports:
        - containerPort: 8090
          name: http
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: siddhuconsumer  # destination host used in virtual-service.yaml
  namespace: springboot-application
  labels:
    service: springboot-docker-consumer
spec:
  type: NodePort
  selector:
    # matches the pod label set in the Deployment template above
    component: springboot-docker-consumer
  ports:
  - name: http  # the http name prefix tells Istio this port carries HTTP
    port: 8090
    targetPort: 8090
```

4- springboot-docker-producer.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: springboot-docker-producer
  namespace: springboot-application
spec:
  selector:
    matchLabels:
      component: springboot-docker-producer
  template:
    metadata:
      labels:
        component: springboot-docker-producer
    spec:
      containers:
      - name: springboot-docker-producer
        image: shdhumale/springboot-docker-producer:latest
        env:
        - name: discovery.type
          value: single-node
        ports:
        - containerPort: 8091
          name: http
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: siddhuproducer
  namespace: springboot-application
  labels:
    service: springboot-docker-producer
spec:
  type: NodePort
  selector:
    # matches the pod label set in the Deployment template above
    component: springboot-docker-producer
  ports:
  - name: http  # the http name prefix tells Istio this port carries HTTP
    port: 8091
    targetPort: 8091
```

Now let's apply these files in sequence and check that all the services and deployments are created properly:

```
kubectl apply -f gateway.yaml
kubectl apply -f virtual-service.yaml
kubectl apply -f springboot-docker-producer.yaml
kubectl apply -f springboot-docker-consumer.yaml
```

```
C:\Istio-workspace>kubectl apply -f gateway.yaml
gateway.networking.istio.io/mysiddhuweb-gateway created

C:\Istio-workspace>kubectl apply -f virtual-service.yaml
virtualservice.networking.istio.io/mysiddhuweb-vs created

C:\Istio-workspace>kubectl apply -f springboot-docker-producer.yaml
deployment.apps/springboot-docker-producer created
service/siddhuproducer created

C:\Istio-workspace>kubectl apply -f springboot-docker-consumer.yaml
deployment.apps/springboot-docker-consumer created
service/siddhuconsumer created
```

Now check the pods.

The READY column shows 2/2 for each microservice pod. Each pod now runs two containers: our microservice and the Envoy sidecar proxy injected by ISTIO.

```
C:\Users\Siddhartha>kubectl get pods -n springboot-application -o wide
NAME                                          READY   STATUS    RESTARTS   AGE     IP         NODE             NOMINATED NODE   READINESS GATES
springboot-docker-consumer-69cd4b98f-xzhgs    2/2     Running   0          7m3s    10.1.0.9   docker-desktop   <none>           <none>
springboot-docker-producer-6866686c74-g4w9k   2/2     Running   0          7m13s   10.1.0.8   docker-desktop   <none>           <none>
```
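A READY value of 2/2 is the quickest signal that the sidecar is there. The Python sketch below checks every pod in the namespace at once. It reads the JSON from `kubectl get pods -n springboot-application -o json` and lists the pods that have no container named istio-proxy, which is the sidecar's name in the describe output below. The helper names and the second sample pod are hypothetical.

```python
import json
import subprocess


def pods_without_sidecar(pod_list: dict, proxy_name: str = "istio-proxy") -> list:
    """Return names of pods whose containers do not include the Envoy sidecar.

    pod_list is the JSON printed by `kubectl get pods -n <ns> -o json`.
    """
    missing = []
    for pod in pod_list.get("items", []):
        names = [c["name"] for c in pod["spec"].get("containers", [])]
        if proxy_name not in names:
            missing.append(pod["metadata"]["name"])
    return missing


def get_pods(namespace: str) -> dict:
    """Fetch the pod list of a namespace via kubectl (assumes kubectl on PATH)."""
    out = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


# Hand-written sample: one injected pod, one hypothetical pod without a sidecar.
sample = {
    "items": [
        {"metadata": {"name": "springboot-docker-consumer-69cd4b98f-xzhgs"},
         "spec": {"containers": [{"name": "springboot-docker-consumer"},
                                 {"name": "istio-proxy"}]}},
        {"metadata": {"name": "hypothetical-unlabelled-pod"},
         "spec": {"containers": [{"name": "app"}]}},
    ]
}
print(pods_without_sidecar(sample))  # ['hypothetical-unlabelled-pod']
```

For the setup in this post, `print(pods_without_sidecar(get_pods("springboot-application")))` should print an empty list.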

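Let's describe both pods to confirm that each has two containers: one for the original microservice and one for the Envoy proxy (istio-proxy). Note also the istio-init init container. It runs istio-iptables to set up the rules that redirect the pod's traffic through the proxy.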

```
C:\Users\Siddhartha>kubectl describe pod springboot-docker-consumer-69cd4b98f-xzhgs -n springboot-application
Name:         springboot-docker-consumer-69cd4b98f-xzhgs
Namespace:    springboot-application
Priority:     0
Node:         docker-desktop/192.168.65.4
Start Time:   Fri, 18 Jun 2021 14:13:14 +0530
Labels:       component=springboot-docker-consumer
              istio.io/rev=default
              pod-template-hash=69cd4b98f
              security.istio.io/tlsMode=istio
              service.istio.io/canonical-name=springboot-docker-consumer
              service.istio.io/canonical-revision=latest
Annotations:  kubectl.kubernetes.io/default-container: springboot-docker-consumer
              kubectl.kubernetes.io/default-logs-container: springboot-docker-consumer
              prometheus.io/path: /stats/prometheus
              prometheus.io/port: 15020
              prometheus.io/scrape: true
              sidecar.istio.io/status:
                {"initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["istio-envoy","istio-data","istio-podinfo","istiod-ca-cert"],"ima...
Status:       Running
IP:           10.1.0.9
IPs:
  IP:  10.1.0.9
Controlled By:  ReplicaSet/springboot-docker-consumer-69cd4b98f
Init Containers:
  istio-init:
    Container ID:  docker://71f597cf1d9f2be04ddeeb16913f4f7d20b3cf2f5e13375c15a78dcd497652f3
    Image:         docker.io/istio/proxyv2:1.10.1
    Image ID:      docker-pullable://istio/proxyv2@sha256:d9b295da022ad826c54d5bb49f1f2b661826efd8c2672b2f61ddc2aedac78cfc
    Port:          <none>
    Host Port:     <none>
    Args:
      istio-iptables
      -p
      15001
      -z
      15006
      -u
      1337
      -m
      REDIRECT
      -i
      *
      -x
      -b
      *
      -d
      15090,15021,15020
    State:          Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Fri, 18 Jun 2021 14:13:23 +0530
      Finished:     Fri, 18 Jun 2021 14:13:23 +0530
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     2
      memory:  1Gi
    Requests:
      cpu:        100m
      memory:     128Mi
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-f9gb7 (ro)
Containers:
  springboot-docker-consumer:
    Container ID:   docker://f7bcbc66e99d2e526dc5aa1b1f2b7fbc61d86a17e85a201aa487584e858a3bba
    Image:          shdhumale/springboot-docker-consumer:latest
    Image ID:       docker-pullable://shdhumale/springboot-docker-consumer@sha256:cf56fa3d5b8070aca9e13aec1bad043b59f0df08ee44ad77317f24d39afcdc24
    Port:           8090/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Fri, 18 Jun 2021 14:19:06 +0530
    Ready:          True
    Restart Count:  0
    Environment:
      discovery.type:  single-node
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-f9gb7 (ro)
  istio-proxy:
    Container ID:  docker://f4c4ed6afc332767c1251010efc1eb776f8bb03633dc51a76af5b23ca551819a
    Image:         docker.io/istio/proxyv2:1.10.1
    Image ID:      docker-pullable://istio/proxyv2@sha256:d9b295da022ad826c54d5bb49f1f2b661826efd8c2672b2f61ddc2aedac78cfc
    Port:          15090/TCP
    Host Port:     0/TCP
    Args:
      proxy
      sidecar
      --domain
      $(POD_NAMESPACE).svc.cluster.local
      --serviceCluster
      springboot-docker-consumer.springboot-application
      --proxyLogLevel=warning
      --proxyComponentLogLevel=misc:error
      --log_output_level=default:info
      --concurrency
      2
    State:          Running
      Started:      Fri, 18 Jun 2021 14:19:08 +0530
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     2
      memory:  1Gi
    Requests:
      cpu:      100m
      memory:   128Mi
    Readiness:  http-get http://:15021/healthz/ready delay=1s timeout=3s period=2s #success=1 #failure=30
    Environment:
      JWT_POLICY:                    first-party-jwt
      PILOT_CERT_PROVIDER:           istiod
      CA_ADDR:                       istiod.istio-system.svc:15012
      POD_NAME:                      springboot-docker-consumer-69cd4b98f-xzhgs (v1:metadata.name)
      POD_NAMESPACE:                 springboot-application (v1:metadata.namespace)
      INSTANCE_IP:                    (v1:status.podIP)
      SERVICE_ACCOUNT:                (v1:spec.serviceAccountName)
      HOST_IP:                        (v1:status.hostIP)
      CANONICAL_SERVICE:              (v1:metadata.labels['service.istio.io/canonical-name'])
      CANONICAL_REVISION:             (v1:metadata.labels['service.istio.io/canonical-revision'])
      PROXY_CONFIG:                  {}
      ISTIO_META_POD_PORTS:          [
                                         {"name":"http","containerPort":8090,"protocol":"TCP"}
                                     ]
      ISTIO_META_APP_CONTAINERS:     springboot-docker-consumer
      ISTIO_META_CLUSTER_ID:         Kubernetes
      ISTIO_META_INTERCEPTION_MODE:  REDIRECT
      ISTIO_META_WORKLOAD_NAME:      springboot-docker-consumer
      ISTIO_META_OWNER:              kubernetes://apis/apps/v1/namespaces/springboot-application/deployments/springboot-docker-consumer
      ISTIO_META_MESH_ID:            cluster.local
      TRUST_DOMAIN:                  cluster.local
    Mounts:
      /etc/istio/pod from istio-podinfo (rw)
      /etc/istio/proxy from istio-envoy (rw)
      /var/lib/istio/data from istio-data (rw)
      /var/run/secrets/istio from istiod-ca-cert (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-f9gb7 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  istio-envoy:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:     Memory
    SizeLimit:  <unset>
  istio-data:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:
    SizeLimit:  <unset>
  istio-podinfo:
    Type:  DownwardAPI (a volume populated by information about the pod)
    Items:
      metadata.labels -> labels
      metadata.annotations -> annotations
      limits.cpu -> cpu-limit
      requests.cpu -> cpu-request
  istiod-ca-cert:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      istio-ca-root-cert
    Optional:  false
  default-token-f9gb7:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-f9gb7
    Optional:    false
QoS Class:       Burstable
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  9m14s  default-scheduler  Successfully assigned springboot-application/springboot-docker-consumer-69cd4b98f-xzhgs to docker-desktop
  Normal  Pulled     9m7s   kubelet            Container image "docker.io/istio/proxyv2:1.10.1" already present on machine
  Normal  Created    9m5s   kubelet            Created container istio-init
  Normal  Started    9m3s   kubelet            Started container istio-init
  Normal  Pulling    9m3s   kubelet            Pulling image "shdhumale/springboot-docker-consumer:latest"
  Normal  Pulled     3m23s  kubelet            Successfully pulled image "shdhumale/springboot-docker-consumer:latest" in 5m39.6325536s
  Normal  Created    3m21s  kubelet            Created container springboot-docker-consumer
  Normal  Started    3m20s  kubelet            Started container springboot-docker-consumer
  Normal  Pulled     3m20s  kubelet            Container image "docker.io/istio/proxyv2:1.10.1" already present on machine
  Normal  Created    3m19s  kubelet            Created container istio-proxy
  Normal  Started    3m18s  kubelet            Started container istio-proxy
```

```
C:\Users\Siddhartha>kubectl describe pod springboot-docker-producer-6866686c74-g4w9k -n springboot-application
Name:         springboot-docker-producer-6866686c74-g4w9k
Namespace:    springboot-application
Priority:     0
Node:         docker-desktop/192.168.65.4
Start Time:   Fri, 18 Jun 2021 14:13:03 +0530
Labels:       component=springboot-docker-producer
              istio.io/rev=default
              pod-template-hash=6866686c74
              security.istio.io/tlsMode=istio
              service.istio.io/canonical-name=springboot-docker-producer
              service.istio.io/canonical-revision=latest
Annotations:  kubectl.kubernetes.io/default-container: springboot-docker-producer
              kubectl.kubernetes.io/default-logs-container: springboot-docker-producer
              prometheus.io/path: /stats/prometheus
              prometheus.io/port: 15020
              prometheus.io/scrape: true
              sidecar.istio.io/status:
                {"initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["istio-envoy","istio-data","istio-podinfo","istiod-ca-cert"],"ima...
Status:       Running
IP:           10.1.0.8
IPs:
  IP:  10.1.0.8
Controlled By:  ReplicaSet/springboot-docker-producer-6866686c74
Init Containers:
  istio-init:
    Container ID:  docker://1bb4e76bc3f0262665427207bc1c84218cf67f3bbe933770d7db7ace9fa075a2
    Image:         docker.io/istio/proxyv2:1.10.1
    Image ID:      docker-pullable://istio/proxyv2@sha256:d9b295da022ad826c54d5bb49f1f2b661826efd8c2672b2f61ddc2aedac78cfc
    Port:          <none>
    Host Port:     <none>
    Args:
      istio-iptables
      -p
      15001
      -z
      15006
      -u
      1337
      -m
      REDIRECT
      -i
      *
      -x
      -b
      *
      -d
      15090,15021,15020
    State:          Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Fri, 18 Jun 2021 14:13:12 +0530
      Finished:     Fri, 18 Jun 2021 14:13:12 +0530
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     2
      memory:  1Gi
    Requests:
      cpu:        100m
      memory:     128Mi
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-f9gb7 (ro)
Containers:
  springboot-docker-producer:
    Container ID:   docker://441336e7bcbe3a696306802f397c685348f26e7d966b16ea75a4661808d451f6
    Image:          shdhumale/springboot-docker-producer:latest
    Image ID:       docker-pullable://shdhumale/springboot-docker-producer@sha256:1aeb1cd85f71cfbaca70bc00cc707d1bfcaf381875d3e438f1d2c06cd96cfaaf
    Port:           8091/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Fri, 18 Jun 2021 14:18:51 +0530
    Ready:          True
    Restart Count:  0
    Environment:
      discovery.type:  single-node
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-f9gb7 (ro)
  istio-proxy:
    Container ID:  docker://ccdff6dcc4f854663e1ff9782a9eb78b59b97bc8f661ac14cc954ecb30a98311
    Image:         docker.io/istio/proxyv2:1.10.1
    Image ID:      docker-pullable://istio/proxyv2@sha256:d9b295da022ad826c54d5bb49f1f2b661826efd8c2672b2f61ddc2aedac78cfc
    Port:          15090/TCP
    Host Port:     0/TCP
    Args:
      proxy
      sidecar
      --domain
      $(POD_NAMESPACE).svc.cluster.local
      --serviceCluster
      springboot-docker-producer.springboot-application
      --proxyLogLevel=warning
      --proxyComponentLogLevel=misc:error
      --log_output_level=default:info
      --concurrency
      2
    State:          Running
      Started:      Fri, 18 Jun 2021 14:18:54 +0530
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     2
      memory:  1Gi
    Requests:
      cpu:      100m
      memory:   128Mi
    Readiness:  http-get http://:15021/healthz/ready delay=1s timeout=3s period=2s #success=1 #failure=30
    Environment:
      JWT_POLICY:                    first-party-jwt
      PILOT_CERT_PROVIDER:           istiod
      CA_ADDR:                       istiod.istio-system.svc:15012
      POD_NAME:                      springboot-docker-producer-6866686c74-g4w9k (v1:metadata.name)
      POD_NAMESPACE:                 springboot-application (v1:metadata.namespace)
      INSTANCE_IP:                    (v1:status.podIP)
      SERVICE_ACCOUNT:                (v1:spec.serviceAccountName)
      HOST_IP:                        (v1:status.hostIP)
      CANONICAL_SERVICE:              (v1:metadata.labels['service.istio.io/canonical-name'])
      CANONICAL_REVISION:             (v1:metadata.labels['service.istio.io/canonical-revision'])
      PROXY_CONFIG:                  {}
      ISTIO_META_POD_PORTS:          [
                                         {"name":"http","containerPort":8091,"protocol":"TCP"}
                                     ]
      ISTIO_META_APP_CONTAINERS:     springboot-docker-producer
      ISTIO_META_CLUSTER_ID:         Kubernetes
      ISTIO_META_INTERCEPTION_MODE:  REDIRECT
      ISTIO_META_WORKLOAD_NAME:      springboot-docker-producer
      ISTIO_META_OWNER:              kubernetes://apis/apps/v1/namespaces/springboot-application/deployments/springboot-docker-producer
      ISTIO_META_MESH_ID:            cluster.local
      TRUST_DOMAIN:                  cluster.local
    Mounts:
      /etc/istio/pod from istio-podinfo (rw)
      /etc/istio/proxy from istio-envoy (rw)
      /var/lib/istio/data from istio-data (rw)
      /var/run/secrets/istio from istiod-ca-cert (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-f9gb7 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  istio-envoy:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:     Memory
    SizeLimit:  <unset>
  istio-data:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:
    SizeLimit:  <unset>
  istio-podinfo:
    Type:  DownwardAPI (a volume populated by information about the pod)
    Items:
      metadata.labels -> labels
      metadata.annotations -> annotations
      limits.cpu -> cpu-limit
      requests.cpu -> cpu-request
  istiod-ca-cert:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      istio-ca-root-cert
    Optional:  false
  default-token-f9gb7:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-f9gb7
    Optional:    false
QoS Class:       Burstable
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason       Age    From               Message
  ----     ------       ----   ----               -------
  Normal   Scheduled    10m    default-scheduler  Successfully assigned springboot-application/springboot-docker-producer-6866686c74-g4w9k to docker-desktop
  Warning  FailedMount  10m    kubelet            MountVolume.SetUp failed for volume "istiod-ca-cert" : failed to sync configmap cache: timed out waiting for the condition
  Normal   Pulled       10m    kubelet            Container image "docker.io/istio/proxyv2:1.10.1" already present on machine
  Normal   Created      10m    kubelet            Created container istio-init
  Normal   Started      9m59s  kubelet            Started container istio-init
  Normal   Pulling      9m58s  kubelet            Pulling image "shdhumale/springboot-docker-producer:latest"
  Normal   Pulled       4m25s  kubelet            Successfully pulled image "shdhumale/springboot-docker-producer:latest" in 5m33.0969143s
  Normal   Created      4m21s  kubelet            Created container springboot-docker-producer
  Normal   Started      4m20s  kubelet            Started container springboot-docker-producer
  Normal   Pulled       4m20s  kubelet            Container image "docker.io/istio/proxyv2:1.10.1" already present on machine
  Normal   Created      4m18s  kubelet            Created container istio-proxy
  Normal   Started      4m17s  kubelet            Started container istio-proxy
  Warning  Unhealthy    4m16s  kubelet            Readiness probe failed: Get "http://10.1.0.8:15021/healthz/ready": dial tcp 10.1.0.8:15021: connect: connection refused
```

The FailedMount and Unhealthy warnings are transient start-up events. The proxy's readiness endpoint was simply not listening yet, and the Conditions section shows the pod ended up Ready.

Now everything is set up:

1- Istio core and Istiod are installed.

2- The springboot-application namespace is labelled for sidecar injection.

3- Our microservices are deployed.

Now let's look at an important aspect of ISTIO: monitoring and tracing (observability) in a distributed environment.

In the downloaded ISTIO folder C:\istio-1.10.1\samples\addons you will find YAML files that install

Prometheus, Grafana, Jaeger and Kiali.

Note:- the Zipkin YAML is in the extras folder, i.e. C:\istio-1.10.1\samples\addons\extras.

Now let's apply these YAML files and see in the UI how they help us debug and monitor the applications.

Please also refer to the documentation below for more information:

https://istio.io/latest/docs/tasks/observability/distributed-tracing/

Now let's apply all these Kubernetes YAML files as shown below:

```
C:\Users\Siddhartha>kubectl apply -f C:\istio-1.10.1\samples\addons
serviceaccount/grafana unchanged
configmap/grafana unchanged
service/grafana unchanged
deployment.apps/grafana configured
configmap/istio-grafana-dashboards configured
configmap/istio-services-grafana-dashboards configured
deployment.apps/jaeger unchanged
service/tracing unchanged
service/zipkin unchanged
service/jaeger-collector unchanged
customresourcedefinition.apiextensions.k8s.io/monitoringdashboards.monitoring.kiali.io unchanged
serviceaccount/kiali unchanged
configmap/kiali unchanged
clusterrole.rbac.authorization.k8s.io/kiali-viewer unchanged
clusterrole.rbac.authorization.k8s.io/kiali unchanged
clusterrolebinding.rbac.authorization.k8s.io/kiali unchanged
role.rbac.authorization.k8s.io/kiali-controlplane unchanged
rolebinding.rbac.authorization.k8s.io/kiali-controlplane unchanged
service/kiali unchanged
deployment.apps/kiali unchanged
monitoringdashboard.monitoring.kiali.io/envoy created
monitoringdashboard.monitoring.kiali.io/go created
monitoringdashboard.monitoring.kiali.io/kiali created
monitoringdashboard.monitoring.kiali.io/micrometer-1.0.6-jvm-pool created
monitoringdashboard.monitoring.kiali.io/micrometer-1.0.6-jvm created
monitoringdashboard.monitoring.kiali.io/micrometer-1.1-jvm created
monitoringdashboard.monitoring.kiali.io/microprofile-1.1 created
monitoringdashboard.monitoring.kiali.io/microprofile-x.y created
monitoringdashboard.monitoring.kiali.io/nodejs created
monitoringdashboard.monitoring.kiali.io/quarkus created
monitoringdashboard.monitoring.kiali.io/springboot-jvm-pool created
monitoringdashboard.monitoring.kiali.io/springboot-jvm created
monitoringdashboard.monitoring.kiali.io/springboot-tomcat created
monitoringdashboard.monitoring.kiali.io/thorntail created
monitoringdashboard.monitoring.kiali.io/tomcat created
monitoringdashboard.monitoring.kiali.io/vertx-client created
monitoringdashboard.monitoring.kiali.io/vertx-eventbus created
monitoringdashboard.monitoring.kiali.io/vertx-jvm created
monitoringdashboard.monitoring.kiali.io/vertx-pool created
monitoringdashboard.monitoring.kiali.io/vertx-server created
serviceaccount/prometheus unchanged
configmap/prometheus unchanged
clusterrole.rbac.authorization.k8s.io/prometheus unchanged
clusterrolebinding.rbac.authorization.k8s.io/prometheus unchanged
service/prometheus unchanged
deployment.apps/prometheus configured
```

Most resources report unchanged because they already existed from an earlier run of the same command. If the first run reports errors for the monitoringdashboard resources, simply run the command again. The Kiali CRD has to be registered before those resources can be created.

Now let's check which pods are running in the istio-system namespace:

```
C:\Users\Siddhartha>kubectl get pod -n istio-system
NAME                                   READY   STATUS    RESTARTS   AGE
grafana-f766d6c97-5ksx5                1/1     Running   0          5m32s
istio-ingressgateway-f6c955cd8-z5hrt   1/1     Running   0          95m
istiod-58bb7c6644-kwb2k                1/1     Running   0          95m
jaeger-7f78b6fb65-n2lnd                1/1     Running   0          5m30s
kiali-85c8cdd5b5-w5h6x                 1/1     Running   0          5m20s
prometheus-69f7f4d689-zqccp            2/2     Running   0          5m16s
```

Earlier we had only two pods, istiod-58bb7c6644-kwb2k and istio-ingressgateway-f6c955cd8-z5hrt. Now the istio-system namespace also runs the Grafana, Jaeger, Kiali and Prometheus pods created by the addon YAML files.
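The addon pods can take a while to become ready. Instead of eyeballing the READY and STATUS columns, you can let a small Python sketch flag anything that is not fully up. It parses the plain-text table printed by `kubectl get pod -n istio-system`, and the function name is my own. If you prefer a built-in check for a single deployment, `kubectl rollout status deployment/kiali -n istio-system` waits until the Kiali rollout completes.

```python
def not_ready_pods(get_pod_output: str) -> list:
    """Return names of pods that are not fully ready or not Running.

    get_pod_output is the table printed by `kubectl get pod -n <ns>`
    (columns NAME READY STATUS RESTARTS AGE).
    """
    rows = [line.split() for line in get_pod_output.splitlines() if line.strip()]
    problems = []
    for cols in rows[1:]:  # rows[0] is the header line
        name, ready, status = cols[0], cols[1], cols[2]
        up, total = ready.split("/")  # e.g. "2/2" -> ("2", "2")
        if up != total or status != "Running":
            problems.append(name)
    return problems


# The istio-system listing shown above, after applying the addons.
istio_system = """
NAME                                   READY   STATUS    RESTARTS   AGE
grafana-f766d6c97-5ksx5                1/1     Running   0          5m32s
istio-ingressgateway-f6c955cd8-z5hrt   1/1     Running   0          95m
istiod-58bb7c6644-kwb2k                1/1     Running   0          95m
jaeger-7f78b6fb65-n2lnd                1/1     Running   0          5m30s
kiali-85c8cdd5b5-w5h6x                 1/1     Running   0          5m20s
prometheus-69f7f4d689-zqccp            2/2     Running   0          5m16s
"""
print(not_ready_pods(istio_system))  # []
```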

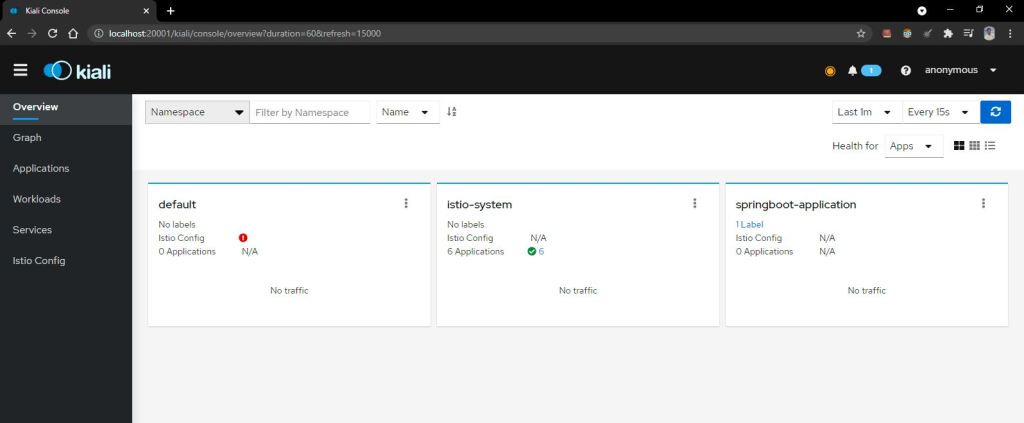

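Please refer to the documentation to see what each of these does for you in ISTIO. In short, Prometheus collects metrics, Kiali visualizes the service mesh, Jaeger provides distributed tracing and Grafana provides metrics dashboards.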

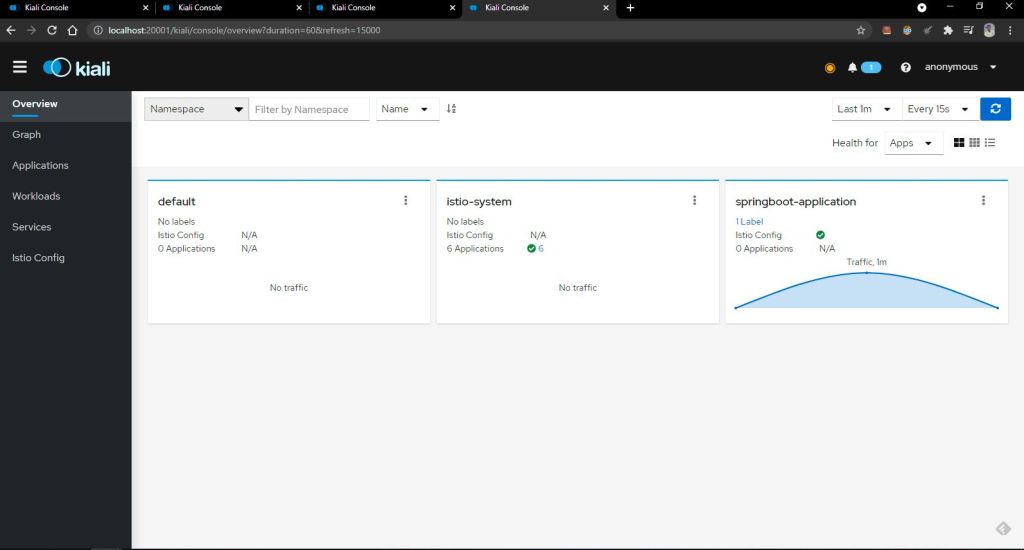

First list the services, then port-forward the Kiali service so that we can open its URL in the browser.

```
C:\Users\Siddhartha>kubectl get svc -n istio-system
NAME                   TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                                      AGE
grafana                ClusterIP      10.109.209.120   <none>        3000/TCP                                     11m
istio-ingressgateway   LoadBalancer   10.103.102.200   localhost     15021:30681/TCP,80:31647/TCP,443:30106/TCP   100m
istiod                 ClusterIP      10.96.183.7      <none>        15010/TCP,15012/TCP,443/TCP,15014/TCP        101m
jaeger-collector       ClusterIP      10.104.166.214   <none>        14268/TCP,14250/TCP                          11m
kiali                  ClusterIP      10.110.23.26     <none>        20001/TCP,9090/TCP                           11m
prometheus             ClusterIP      10.103.97.109    <none>        9090/TCP                                     11m
tracing                ClusterIP      10.104.46.92     <none>        80/TCP                                       11m
zipkin                 ClusterIP      10.105.186.26    <none>        9411/TCP                                     11m
```

```
C:\Users\Siddhartha>kubectl port-forward svc/kiali -n istio-system 20001
Forwarding from 127.0.0.1:20001 -> 20001
Forwarding from [::1]:20001 -> 20001
```
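kubectl port-forward keeps running in the foreground, so leave that window open and browse to the Kiali UI on port 20001. For the sample addon I believe the UI is served under http://localhost:20001/kiali. `istioctl dashboard kiali` is an alternative that sets up the forward and opens the browser for you. If you script the setup, a small poll like the one below avoids opening the browser before the forward is ready. This is a sketch, and the function name is my own.

```python
import time
import urllib.request


def wait_for_url(url: str, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll url until it answers with a status below 400, or give up after timeout."""
    # Bypass any configured HTTP proxy: the port-forward is on localhost.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with opener.open(url, timeout=interval) as resp:
                if resp.status < 400:
                    return True
        except OSError:
            # Connection refused, timeouts and HTTP errors (URLError/HTTPError
            # are OSError subclasses): the forward is not ready yet, keep polling.
            pass
        time.sleep(interval)
    return False
```

For example, `wait_for_url("http://localhost:20001/kiali")` returns True once Kiali answers through the port-forward.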

Now let's check that we can access our producer and consumer through port forwarding.

```
C:\Users\Siddhartha>kubectl get pod -n springboot-application
NAME                                          READY   STATUS    RESTARTS   AGE
springboot-docker-consumer-69cd4b98f-xzhgs    2/2     Running   0          40m
springboot-docker-producer-6866686c74-g4w9k   2/2     Running   0          40m
```

Let's check that the producer microservice is working:

```
C:\Users\Siddhartha>kubectl port-forward springboot-docker-producer-6866686c74-g4w9k 8091:8091 -n springboot-application
Forwarding from 127.0.0.1:8091 -> 8091
Forwarding from [::1]:8091 -> 8091
Handling connection for 8091
```

Let's check that the consumer microservice is working:

```
C:\Users\Siddhartha>kubectl port-forward springboot-docker-consumer-69cd4b98f-xzhgs 8090:8090 -n springboot-application
Forwarding from 127.0.0.1:8090 -> 8090
Forwarding from [::1]:8090 -> 8090
Handling connection for 8090
```

Once requests are flowing through the services, you will be able to see the service graph in Kiali.

Now let's combine all these YAML files into one file, all-in-one.yaml:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: mysiddhuweb-gateway
  namespace: springboot-application
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "mysiddhuweb.example.com"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: mysiddhuweb-vs
  namespace: springboot-application
spec:
  hosts:
  - "mysiddhuweb.example.com"
  gateways:
  - mysiddhuweb-gateway
  http:
  - route:
    - destination:
        host: siddhuconsumer
        port:
          number: 8090
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: springboot-docker-producer
  namespace: springboot-application
spec:
  selector:
    matchLabels:
      component: springboot-docker-producer
  template:
    metadata:
      labels:
        component: springboot-docker-producer
    spec:
      containers:
      - name: springboot-docker-producer
        image: shdhumale/springboot-docker-producer:latest
        env:
        - name: discovery.type
          value: single-node
        ports:
        - containerPort: 8091
          name: http
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: siddhuproducer
  namespace: springboot-application
  labels:
    service: springboot-docker-producer
spec:
  type: NodePort
  selector:
    component: springboot-docker-producer
  ports:
  - name: http
    port: 8091
    targetPort: 8091
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: springboot-docker-consumer
  namespace: springboot-application
spec:
  selector:
    matchLabels:
      component: springboot-docker-consumer
  template:
    metadata:
      labels:
        component: springboot-docker-consumer
    spec:
      containers:
      - name: springboot-docker-consumer
        image: shdhumale/springboot-docker-consumer:latest
        env:
        - name: discovery.type
          value: single-node
        ports:
        - containerPort: 8090
          name: http
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: siddhuconsumer
  namespace: springboot-application
  labels:
    service: springboot-docker-consumer
spec:
  type: NodePort
  selector:
    component: springboot-docker-consumer
  ports:
  - name: http
    port: 8090
    targetPort: 8090
```
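Instead of copying the four files together by hand, you can generate all-in-one.yaml with a few lines of Python. This is a sketch, and the function name is my own. It joins the files with the `---` document separator that `kubectl apply -f` accepts, in the order you pass them.

```python
from pathlib import Path


def merge_manifests(paths, out_path) -> str:
    """Concatenate Kubernetes YAML files into one multi-document file.

    Each file's text is stripped of surrounding blank lines and the pieces
    are joined with '---' separators. The merged text is written to
    out_path and also returned.
    """
    docs = [Path(p).read_text(encoding="utf-8").strip() for p in paths]
    merged = "\n---\n".join(d for d in docs if d) + "\n"
    Path(out_path).write_text(merged, encoding="utf-8")
    return merged
```

To reproduce the order used above, call `merge_manifests(["gateway.yaml", "virtual-service.yaml", "springboot-docker-producer.yaml", "springboot-docker-consumer.yaml"], "all-in-one.yaml")`.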

Whenever required, use the commands below to create or delete all the resources in one go:

```
kubectl apply -f all-in-one.yaml
kubectl delete -f all-in-one.yaml
```

Note:- To see the traffic flow in Kiali you need to call the URL repeatedly, so use the command below:

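```
C:\Users\Siddhartha>curl "http://localhost:8090/getEmployee?[1-2000]"
```

curl's URL globbing expands [1-2000] into 2000 sequential requests, each with a different query string, against the port-forwarded consumer.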
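curl's globbing sends the requests back to back. If you want a pause between calls, or curl is not available, a plain Python loop does the same job on Windows, macOS and Linux. This sketch assumes the consumer is still port-forwarded to localhost:8090 as shown earlier. The function name and defaults are my own choices.

```python
import time
import urllib.error
import urllib.request
from collections import Counter


def generate_traffic(url: str, count: int = 2000, delay: float = 0.0,
                     timeout: float = 5.0) -> Counter:
    """Call url `count` times and tally the results by HTTP status code.

    Failed connections and timeouts are counted under the key "error".
    """
    # Bypass any configured HTTP proxy: the port-forward is on localhost.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    results = Counter()
    for _ in range(count):
        try:
            with opener.open(url, timeout=timeout) as resp:
                resp.read()
                results[resp.status] += 1
        except urllib.error.HTTPError as exc:
            results[exc.code] += 1  # 4xx/5xx responses still count as traffic
        except OSError:
            results["error"] += 1  # refused connection, timeout, reset, ...
        if delay:
            time.sleep(delay)
    return results
```

For example, `print(generate_traffic("http://localhost:8090/getEmployee", count=200, delay=0.5))` sends 200 requests, half a second apart, while you watch the Kiali graph.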

Play with the options of the Kiali graph and see how powerful a monitoring tool it is.

https://github.com/shdhumale/kialiyaml.git

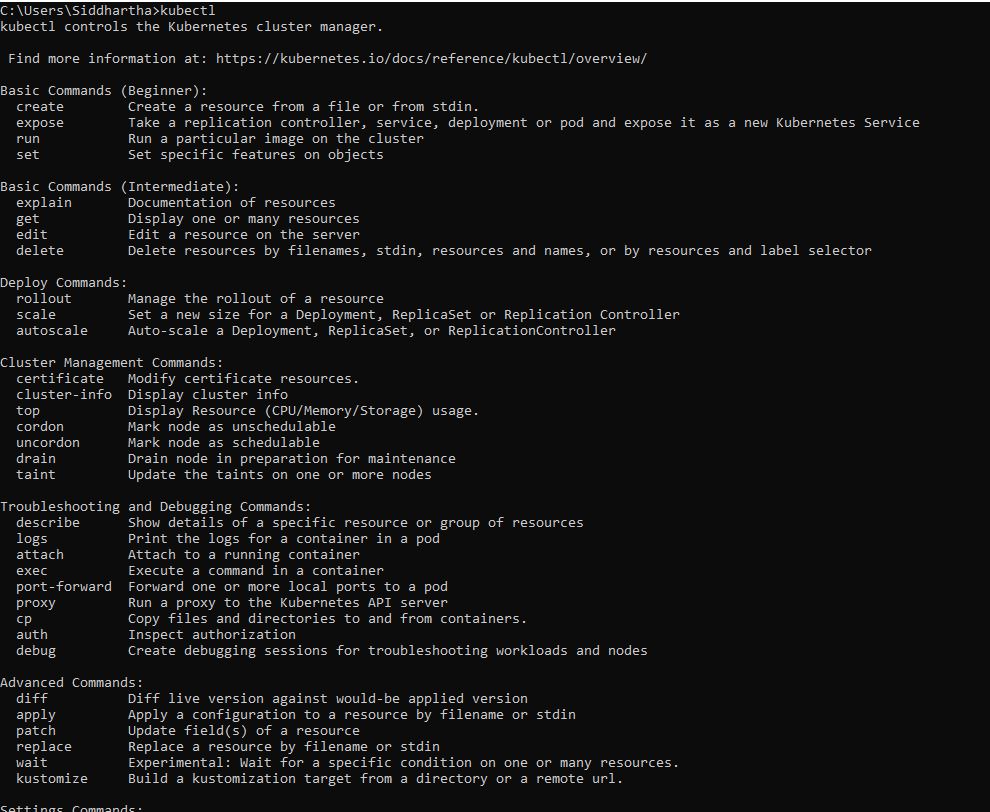

Note :- Some useful commands you will need frequently while working with this POC are given below:

```
kubectl delete -f gateway.yaml
kubectl delete -f virtual-service.yaml
kubectl delete -f springboot-docker-producer.yaml
kubectl delete -f springboot-docker-consumer.yaml
kubectl apply -f gateway.yaml
kubectl apply -f virtual-service.yaml
kubectl apply -f springboot-docker-producer.yaml
kubectl apply -f springboot-docker-consumer.yaml
kubectl apply -f all-in-one.yaml
kubectl delete -f all-in-one.yaml
kubectl get pods -n springboot-application -o wide
kubectl describe pod -n springboot-application
kubectl get pod -n istio-system
kubectl get svc -n istio-system
kubectl get ns
kubectl get pod
kubectl create namespace springboot-application
kubectl get ns springboot-application --show-labels
kubectl label namespace springboot-application istio-injection=enabled
kubectl port-forward svc/kiali -n istio-system 20001
kubectl port-forward deployment/springboot-docker-consumer 8090:8090 -n springboot-application
kubectl port-forward deployment/springboot-docker-producer 8091:8091 -n springboot-application
```
