In the last example we saw how a Kafka Connect source connector reads from MySQL and, for each CRUD operation on the database, writes a corresponding message to a Kafka topic. Now let's work on the Kafka Connect MySQL sink, so that whenever a message arrives in the Kafka topic it is inserted into the MySQL database by the sink connector. Please note that we are using Confluent Kafka for this example.

For this we have to make the following changes:

1- Create a folder named kafka-connect-jdbc inside C:\confluent-community-6.0.1\confluent-6.0.1\share\java if it does not already exist, and add the following jar to it: mysql-connector-java-5.1.49-bin.jar

2- Create a folder named kafka-connect-jdbc inside C:\confluent-community-6.0.1\confluent-6.0.1\etc if it does not already exist, and add the following properties file to it: sink-quickstart-mysql.properties

name=test_mysql_sink

connector.class=io.confluent.connect.jdbc.JdbcSinkConnector

tasks.max=1

connection.url=jdbc:mysql://localhost:3306/test?user=root&password=root

auto.create=true

value.converter.schema.registry.url=http://localhost:8081

key.converter.schema.registry.url=http://localhost:8081

topics.regex=my-replicated-topic-siddhusiddhu

key.converter=org.apache.kafka.connect.json.JsonConverter

key.converter.schemas.enable=false

value.converter=org.apache.kafka.connect.json.JsonConverter

value.converter.schemas.enable=true

insert.mode=insert
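
The JDBC sink needs a schema to create the table and map columns, so with JsonConverter each message value has to carry its own schema in a schema/payload envelope (this applies when value.converter.schemas.enable is true, which is the JsonConverter default). Below is a minimal Python sketch of how such a message could be built. The function names, the schema name, and the "id" and "name" fields are hypothetical and only for illustration, and mapping every Python int to int64 is an assumption made for simplicity.

```python
import json

# Mapping from Python types to Kafka Connect JSON schema type names.
# bool is listed before int because bool is a subclass of int in Python.
CONNECT_TYPES = [(bool, "boolean"), (int, "int64"), (float, "double"), (str, "string")]


def connect_type(value):
    """Return the Connect schema type name for a Python value."""
    for py_type, name in CONNECT_TYPES:
        if isinstance(value, py_type):
            return name
    raise TypeError(f"unsupported value type: {type(value).__name__}")


def to_connect_envelope(record, schema_name="example_record"):
    """Wrap a flat dict in the schema/payload envelope that JsonConverter reads."""
    fields = [
        {"field": key, "type": connect_type(value), "optional": False}
        for key, value in record.items()
    ]
    return {
        "schema": {
            "type": "struct",
            "name": schema_name,  # hypothetical schema name
            "optional": False,
            "fields": fields,
        },
        "payload": record,
    }


if __name__ == "__main__":
    # Hypothetical row; "id" and "name" are illustrative column names.
    print(json.dumps(to_connect_envelope({"id": 1, "name": "siddhu"})))
```

Each printed line can be sent as one message value to the topic. The sink then has the field names and types it needs to create the table when auto.create=true.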

Follow the steps below in order

1- Start Zookeeper

2- Start the Kafka server

3- Now start Kafka Connect using the command below

connect-standalone.bat C:\confluent-community-6.0.1\confluent-6.0.1\etc\kafka\connect-standalone.properties C:\confluent-community-6.0.1\confluent-6.0.1\etc\kafka-connect-jdbc\sink-quickstart-mysql.properties

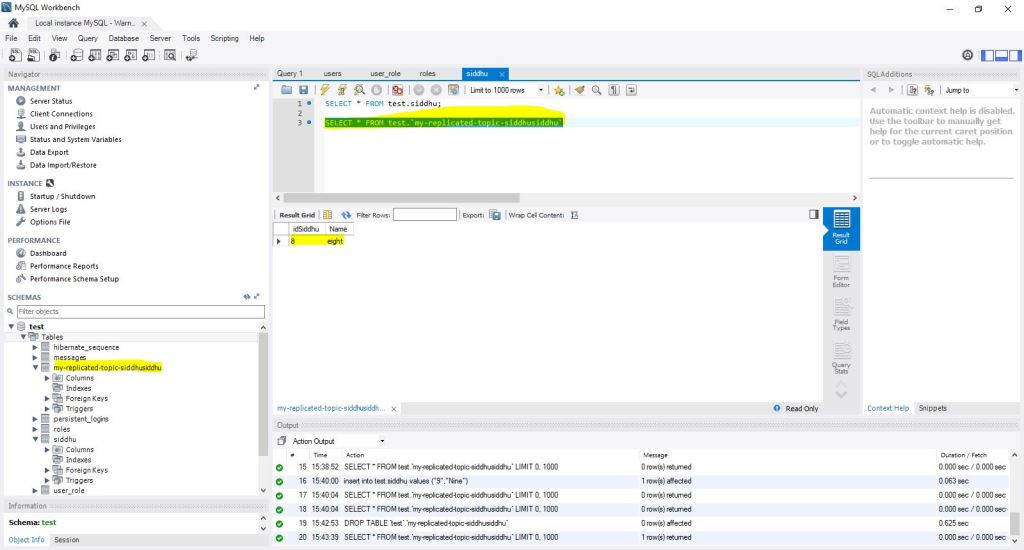

4- Once a message arrives on the topic, you will see a new table created in the schema with the data inserted into it, as shown below.
